Papers that have not been peer-reviewed, including preprints, are not listed here. All of my papers are available on 🔗Google Scholar.

2025

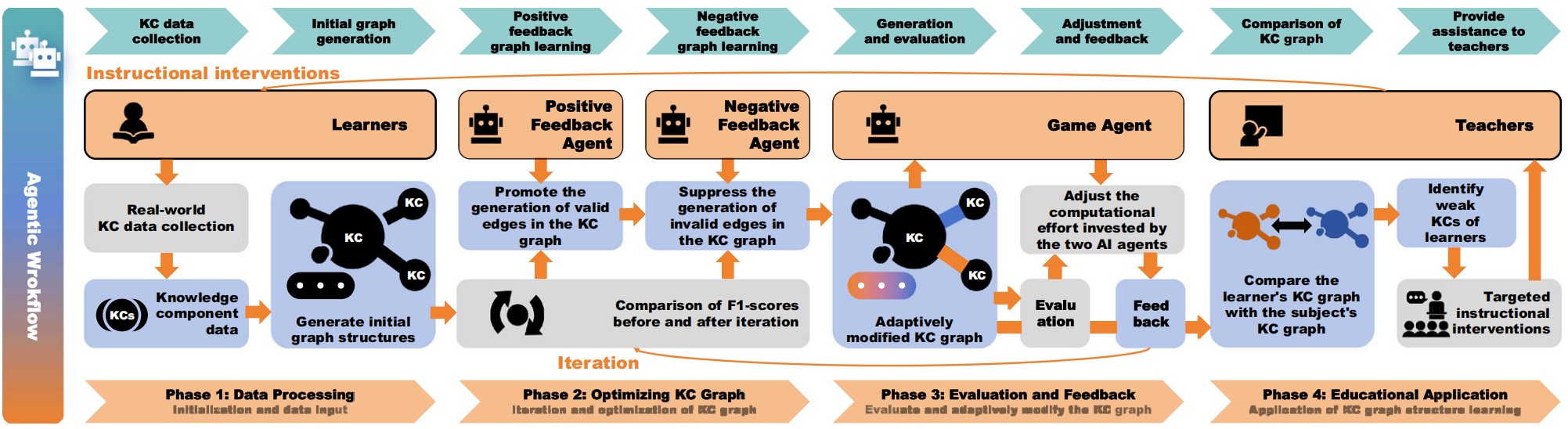

MAS-KCL: Knowledge Component Graph Structure Learning with Large Language Model-Based Agentic Workflow

SCI Q2 CCF-C EI-Indexed Journal

Journal The Visual Computer, 2025

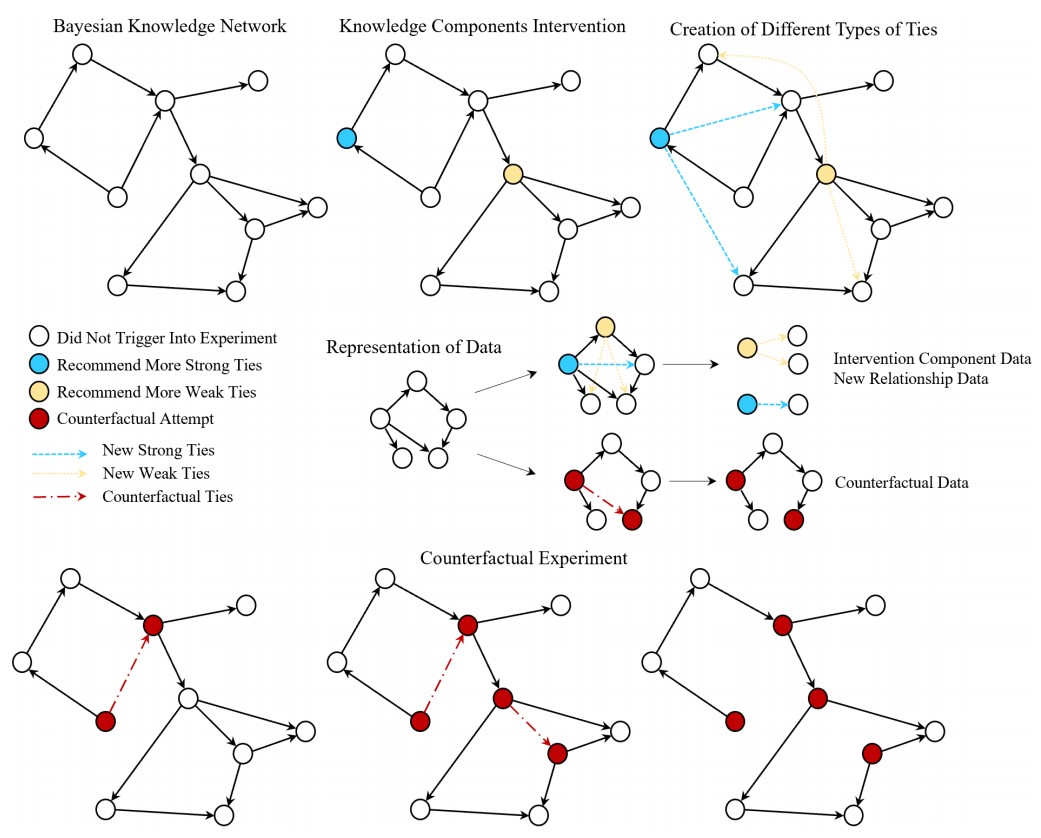

Knowledge components (KCs) are the fundamental units of knowledge in education. A KC graph illustrates the relationships and dependencies between KCs. An accurate KC graph helps educators identify the root causes of learners’ poor performance on specific KCs, enabling targeted instructional interventions. We developed MAS-KCL, a KC graph structure learning algorithm that uses a multi-agent system driven by large language models for adaptive optimization of the KC graph. A bidirectional feedback mechanism is integrated to assess the value of edges and optimize graph structure learning efficiency. We validated this approach on both synthetic and real-world educational datasets, showing its effectiveness in learning path recognition, allowing teachers to design more targeted and effective learning plans.

@article{2025-1_jiang_mas-kcl, title = {{MAS}-{KCL}: knowledge component graph structure learning with large language model-based agentic workflow}, issn = {1432-2315}, shorttitle = {{MAS}-{KCL}}, url = {https://doi.org/10.1007/s00371-025-03946-1}, doi = {10.1007/s00371-025-03946-1}, language = {en}, journal = {The Visual Computer}, author = {Jiang, Yuan-Hao and Tang, Kezong and Chen, Zi-Wei and Wei, Yuang and Liu, Tian-Yi and Wu, Jiayi}, month = may, year = {2025}, }


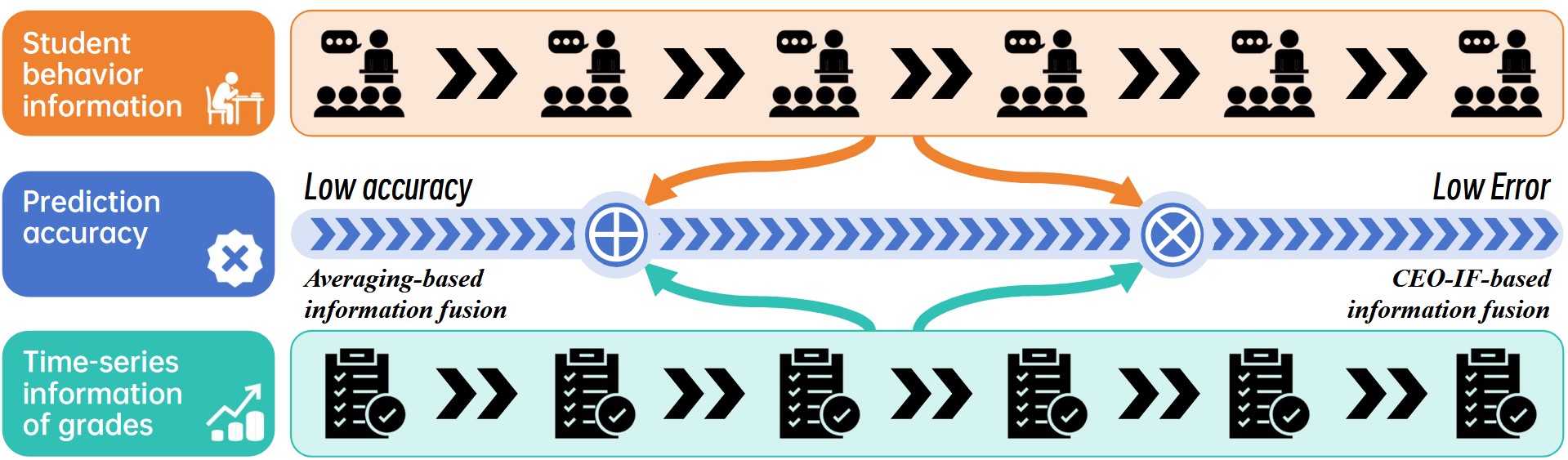

Explainable Learning Outcomes Prediction: Information Fusion Based on Grades Time-Series and Student Behaviors

CCF-A core-A* THCPL A

Conference Extended Abstracts of the ACM CHI Conference on Human Factors in Computing Systems (CHI EA 2025), 2025

Accurately and promptly predicting learners’ outcomes can help educators make instructional decisions and interventions. This helps prevent students from falling into a vicious cycle of declining academic achievement and growing aversion to learning, which can ultimately lead to dropout. Data-driven models often outperform eXplainable Artificial Intelligence (XAI) models in predicting learning outcomes, yet their lack of interpretability can undermine educators' trust. Therefore, this study developed an XAI information fusion framework that not only extracts latent trends from the time series of student grades to enhance predictive performance but also mines explicit relationships between classroom behaviors and learning outcomes, revealing the behavioral causes behind changes in grades. Furthermore, we have publicly released the Dataset for Predicting Outcomes from Time sequences and Student behaviors (DPOTS) and validated the effectiveness of the developed XAI information fusion framework on DPOTS. The results indicate that the Mean Absolute Error (MAE) of the proposed framework, CEO-IF, was reduced by an average of 26.32% compared to the baseline algorithms and by 22.63% compared to the averaging-based information fusion method. The project homepage is available at https://doi.org/10.5281/zenodo.14958102.

@inproceedings{2025-2_jiang_explainable, address = {New York, NY, USA}, series = {{CHI} {EA} '25}, title = {Explainable Learning Outcomes Prediction: {Information} Fusion Based on Grades Time-Series and Student Behaviors}, isbn = {979-8-4007-1395-8}, doi = {10.1145/3706599.3721212}, language = {en-US}, booktitle = {Proceedings of the {Extended} {Abstracts} of the {CHI} {Conference} on {Human} {Factors} in {Computing} {Systems}}, publisher = {Association for Computing Machinery}, author = {Jiang, Yuan-Hao and Chen, Zi-Wei and Zhao, Cong and Tang, Kezong and Duan, Jicong and Zhou, Yizhou}, month = apr, year = {2025}, pages = {1--11}, }


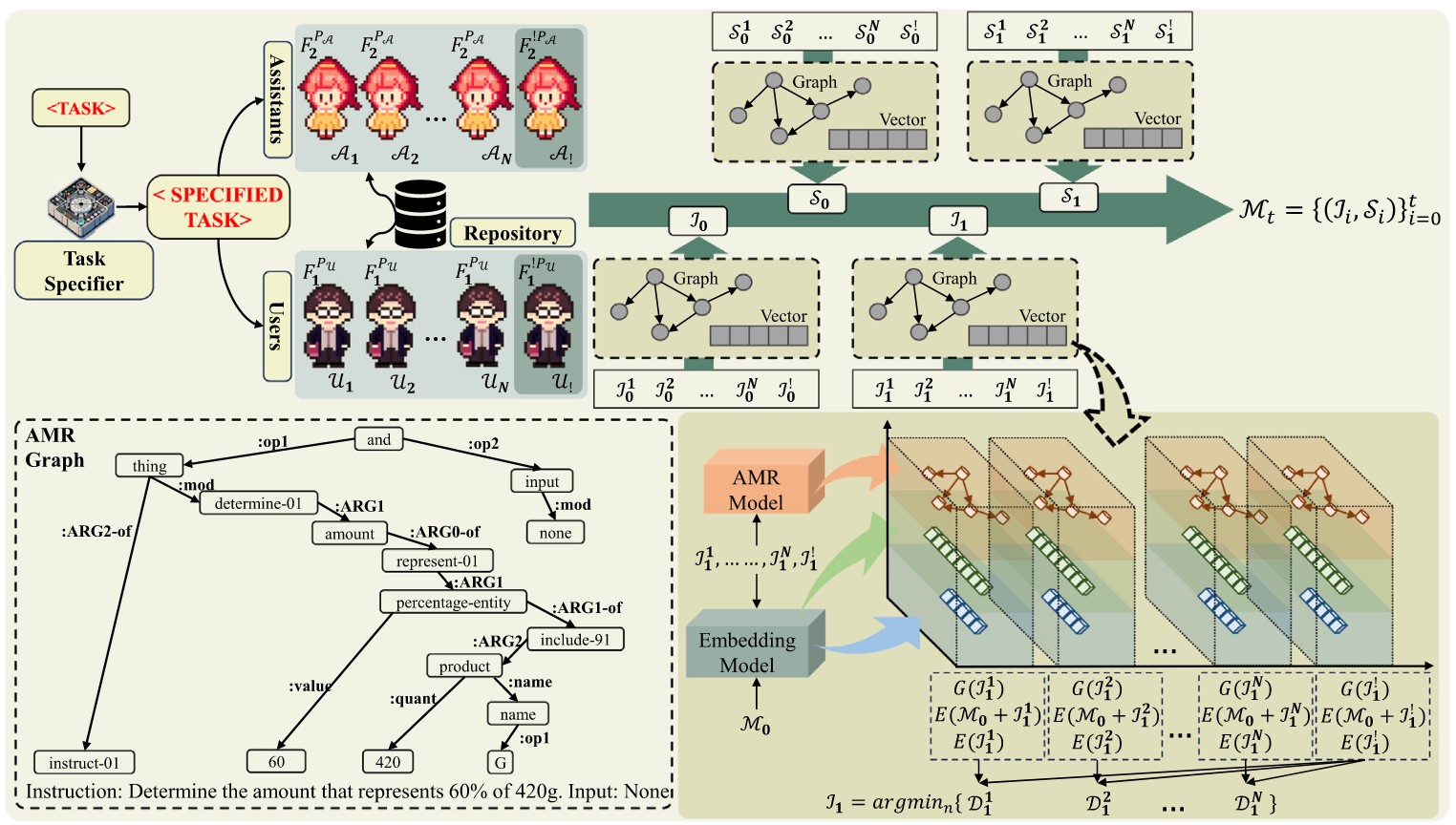

Mitigating Reasoning Hallucination Through Multi-Agent Collaborative Filtering

TOP SCI Q1 IF = 7.5 CCF-C EI-Indexed Journal

Journal Expert Systems with Applications, 2025

Large language models (LLMs) have demonstrated excellent performance in various natural language tasks. However, in practical applications, LLMs frequently exhibit hallucinations, generating content that deviates from instructions or facts, especially in complex reasoning tasks. Existing research has simulated real human behavior through multi-agent debate, voting, and review to enhance models' reasoning capabilities. However, simple multi-agent systems do not progressively verify every reasoning step, and the problems of unstable response quality and agents' lack of continuous learning remain unaddressed. Therefore, in this work, we propose a Multi-agent Collaborative Filtering framework (MCF) based on cross-examination among agents, which cross-verifies each step while filtering and selecting the highest-quality responses from the response space. In addition, to give agents continuous learning capabilities, this paper proposes methods for the automated construction and efficient retrieval of an experience repository. Extensive experiments on ten reasoning datasets of three types (Arithmetic, Commonsense, and Symbolic) indicate that MCF can enhance the diversity of large language models, overcome hallucinations, and select effective responses from a rich response space. Moreover, the experiments verify that the experience repository improves agents' reasoning capabilities. Compared with state-of-the-art methods, the proposed method shows superior performance.

@article{2025-3_shi_mitigating, title = {Mitigating Reasoning Hallucination Through Multi-Agent Collaborative Filtering}, volume = {263}, issn = {0957-4174}, doi = {10.1016/j.eswa.2024.125723}, language = {en-US}, number = {2025}, journal = {Expert Systems with Applications}, author = {Shi, Jinxin and Zhao, Jiabao and Wu, Xingjiao and Xu, Ruyi and Jiang, Yuan-Hao and He, Liang}, month = mar, year = {2025}, pages = {125723},}


2024

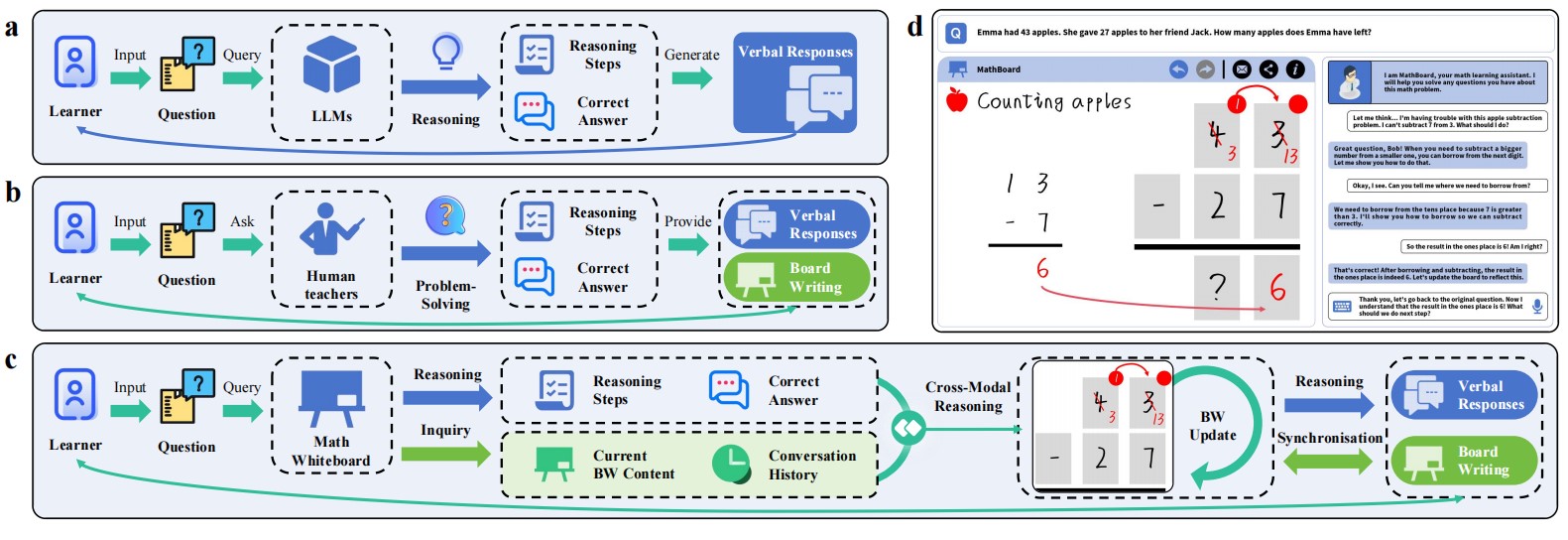

Synchronizing Verbal Responses and Board Writing for Multimodal Math Instruction with LLMs

CCF-A

Conference NeurIPS'24: Conference and Workshop on Neural Information Processing Systems, the 4th Workshop on Mathematical Reasoning and AI, 2024

The advancement of large language models (LLMs) has greatly facilitated math instruction, with the generated textual content serving as verbal responses to address student inquiries. However, in instructional settings, teachers often provide both verbal responses and board writing (BW) simultaneously to enhance students' knowledge construction. To address this, we introduce MathBoard, a multimodal large language model (MLLM) designed for elementary mathematics education, which progressively generates BW. Our study focuses on the provision of BW to learners, aiming to reduce their cognitive load effectively. Furthermore, MathBoard can be integrated with other approaches that enhance mathematical reasoning capabilities. An empirical study involving 34 pre-service teachers demonstrated that the multimodal interactions facilitated by MathBoard were more highly accepted and impactful across various dimensions compared to text-only interactions, significantly promoting learners' social construction of knowledge.

@inproceedings{2024-5_jiang_synchronizing, title = {Synchronizing Verbal Responses and Board Writing for Multimodal Math Instruction with LLMs}, booktitle = {NeurIPS'24: Conference and Workshop on Neural Information Processing Systems, the 4th Workshop on Mathematical Reasoning and AI}, address = {Vancouver, Canada}, publisher = {Neural Information Processing Systems Foundation}, author = {Jiang, Yuan-Hao and Li, Ruijia and Wei, Yuang and Jia, Rui and Shao, Xiaobao and Hu, Hanglei and Jiang, Bo}, year = {2024}, pages = {46--59}, url = {https://openreview.net/forum?id=esbIrV8N12}, }


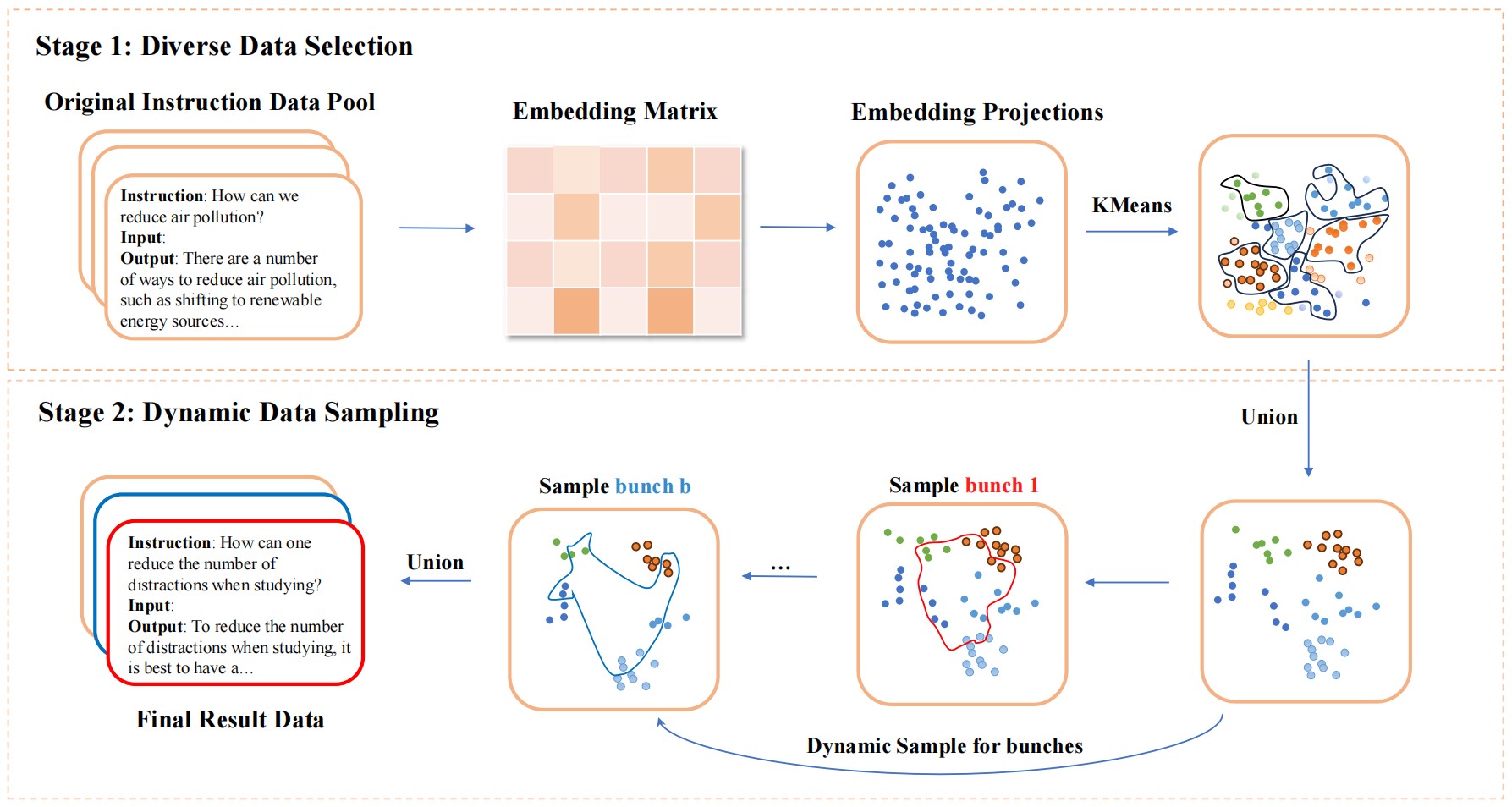

Bread: A Hybrid Approach for Instruction Data Mining through Balanced Retrieval and Dynamic Data Sampling

CCF-C

Xinlin Zhuang, Xin Mao, Yuan-Hao Jiang, Hongyi Wu, Shangqing Zhao, Li Cai, Shu Liu, Yang Chen, Yuxiang Song, Chenghao Jia, Yuhao Zhou, Man Lan

Conference The 13th CCF International Conference on Natural Language Processing and Chinese Computing (NLPCC 2024), 2024

Recent advancements in Instruction Tuning (IT) have shown promise for aligning Large Language Models (LLMs) with users' intentions, yet its efficacy is often compromised by its dependence on high-quality datasets. Previous works have concentrated on aggregating or producing huge IT datasets through human labor or cost-intensive LLM APIs, without adequate mechanisms to guarantee the quality of the resulting data. Moreover, training on such large amounts of IT data is both time-consuming and costly. To address these issues, we present Bread (Instruction Mining through Balanced Retrieval And Dynamic Data Sampling), a novel approach designed to minimize the required volume of IT data. Bread uses a two-stage strategy combining balanced retrieval and dynamic sampling to focus on data diversity and quality, offering a cost-saving solution that does not rely on any specific LLM. Experimental results suggest that Bread outperforms baselines and shows great flexibility across various IT datasets and LLMs, marking a step forward in efficient Instruction Tuning.

@inproceedings{2024-7_zhuang_bread, title = {Bread: A Hybrid Approach for Instruction Data Mining through Balanced Retrieval and Dynamic Data Sampling}, booktitle = {The 13th CCF International Conference on Natural Language Processing and Chinese Computing (NLPCC 2024)}, author = {Zhuang, Xinlin and Mao, Xin and Jiang, Yuan-Hao and Wu, Hongyi and Zhao, Shangqing and Cai, Li and Liu, Shu and Chen, Yang and Song, Yuxiang and Jia, Chenghao and Zhou, Yuhao and Lan, Man}, year = {2024}, month = {nov}, pages = {229--240}, doi = {10.1007/978-981-97-9434-8_18}, language = {en}, }


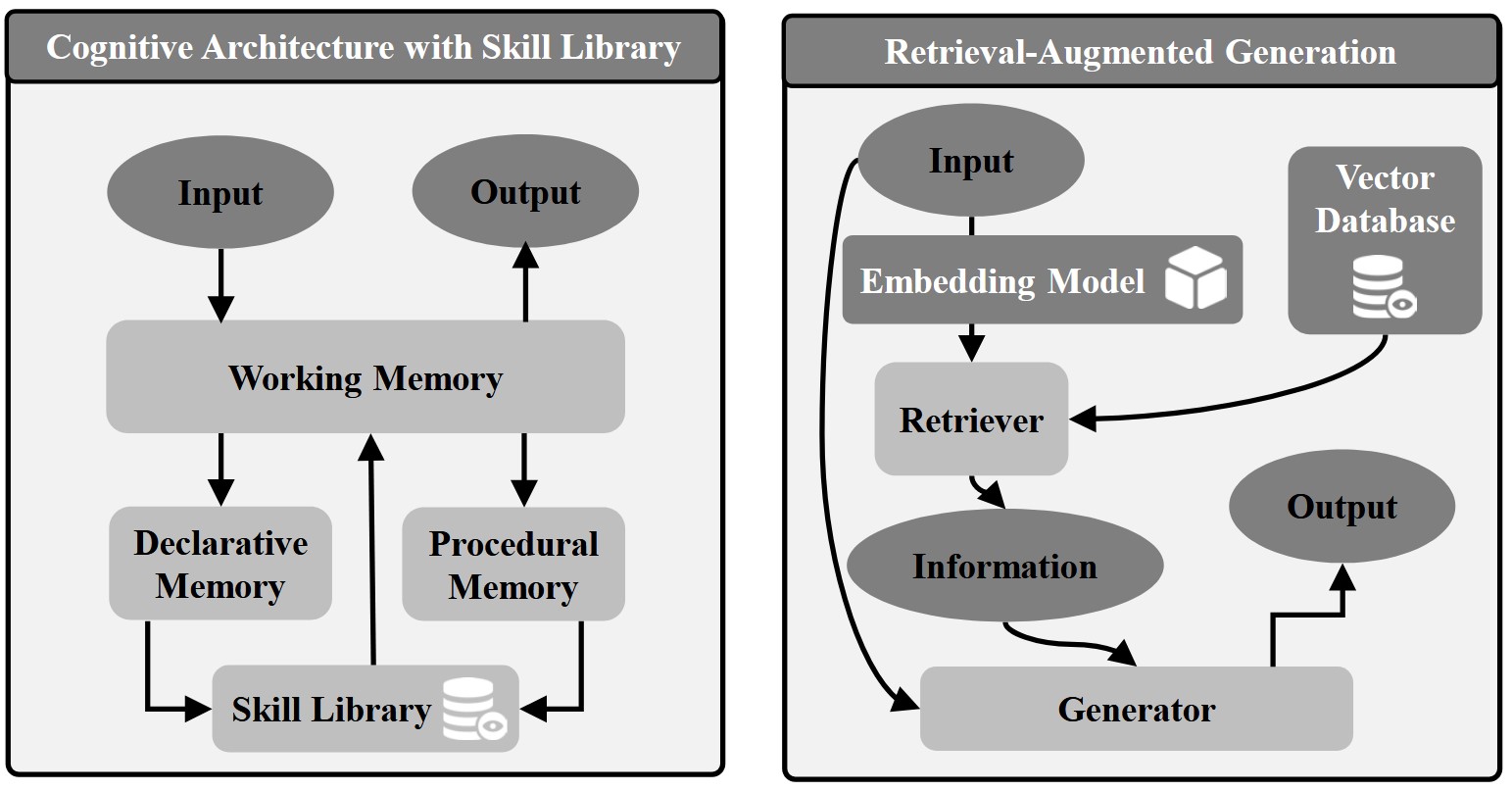

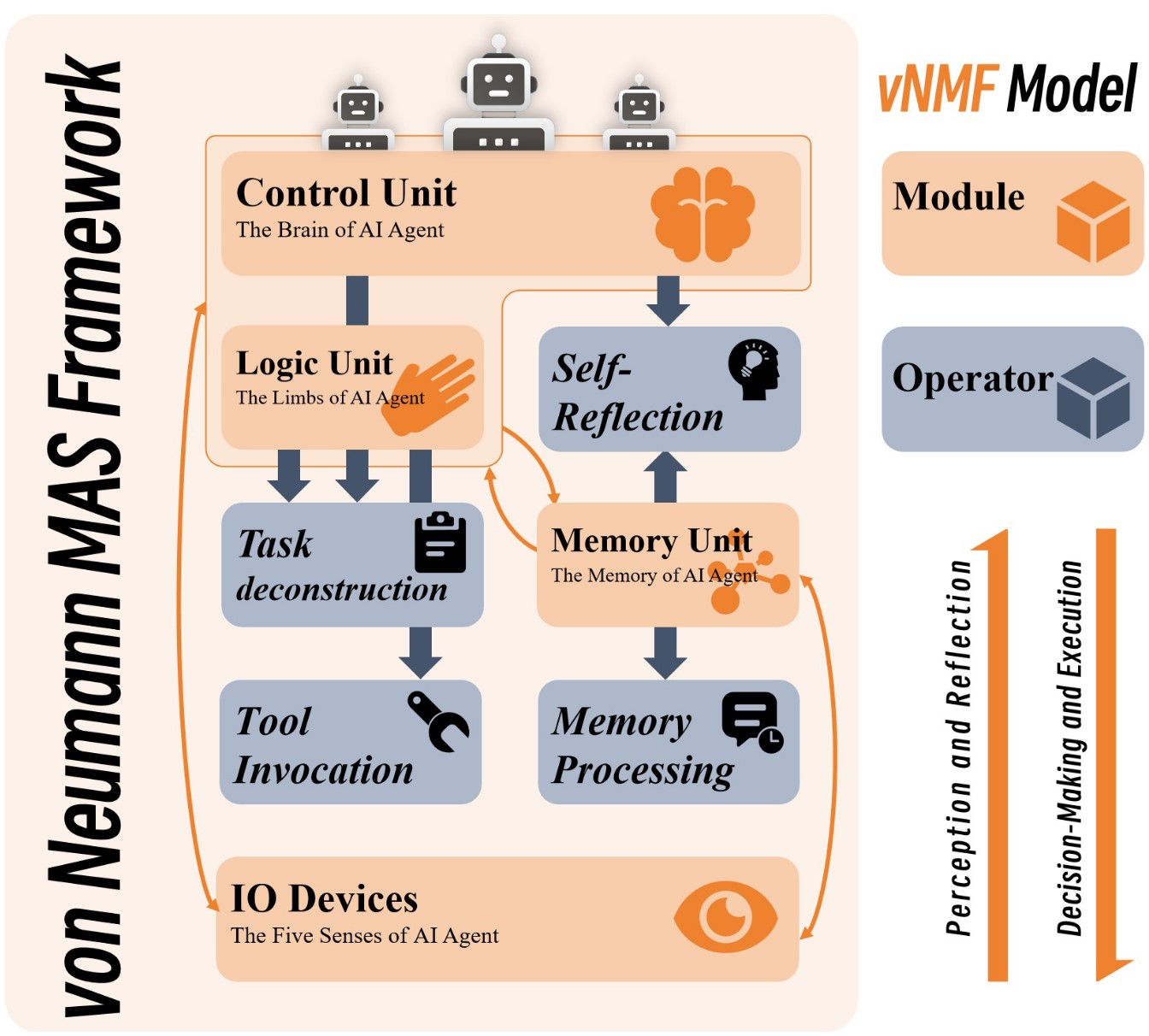

For Learners: AI Agent is All You Need

Book Chapter Nova Science Publishers: New York, USA, 2024

The evolution of Large Language Models (LLMs) has heralded a new era in education. When Artificial Intelligence (AI) Agents are endowed with natural language processing (NLP) capabilities through LLMs, they can actively engage in the learning process, more precisely assessing learning outcomes, offering targeted assistance to learners, and introducing novel pedagogical approaches. Drawing inspiration from the von Neumann machine, this chapter examines the role of AI Agents in the educational domain and introduces the von Neumann AI Agent Framework, which decomposes each AI Agent into four modules: the control unit, the logic unit, the memory unit, and the input-output devices. Building on this modular definition, AI Agents can accomplish task decomposition, self-reflection, memory processing, and tool invocation by accessing different modules, ultimately producing the desired output for learners. Additionally, we provide visual representations of the technologies associated with these tasks, such as Chain of Thought, Reason+Act, and Multi-Agent Debate. Following this, we examine the use of AI Agents in evaluating and improving learning outcomes and briefly explore the phenomenon of swarm intelligence that may emerge during the collaborative interactions of multiple AI Agents.

@incollection{2024-9_jiang-for, title = {For Learners: AI Agent is All You Need}, booktitle = {Enhancing Educational Practices: Strategies for Assessing and Improving Learning Outcomes}, address = {New York, NY, USA}, publisher = {Nova Science Publishers}, author = {Jiang, Yuan-Hao and Shi, Jinxin and Tu, Yukun and Zhou, Yizhou and Zhang, Wenxuan and Wei, Yuang}, editor = {Wei, Yuang and Qi, Changyong and Jiang, Yuan-Hao and Dai, Ling}, year = {2024}, isbn = {979-8-89530-030-5}, doi = {10.52305/RUIG5131}, pages = {21--46}, }


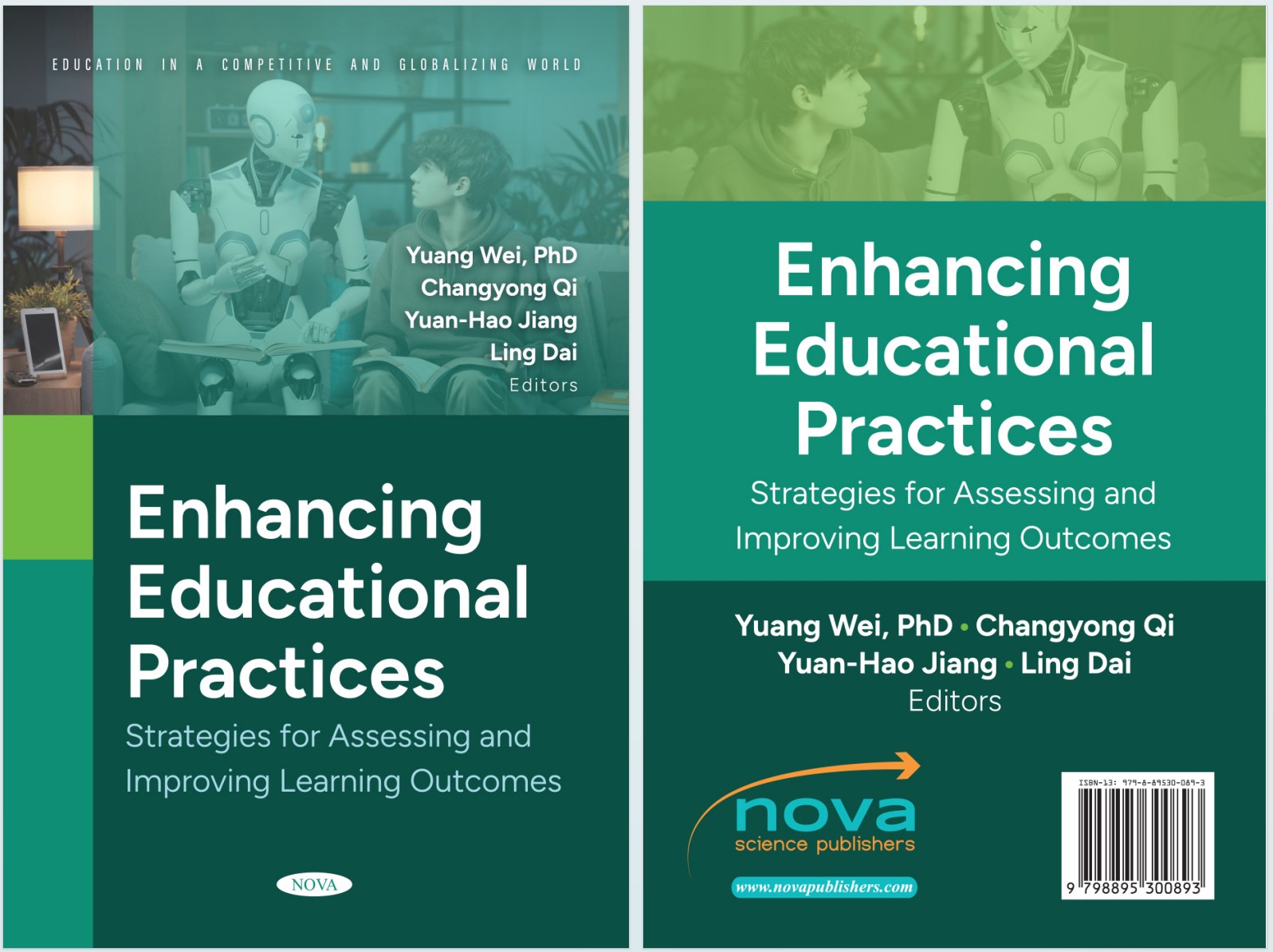

Enhancing Educational Practices: Strategies for Assessing and Improving Learning Outcomes

Editor

Monograph Nova Science Publishers: New York, USA, 2024

The effective assessment of learning outcomes serves as the cornerstone of educational guidance, while improving learning outcomes is the central objective of effective teaching. As intelligent technology continues to advance, the field of education must strive to develop increasingly personalized, effective, and human-centric approaches to assessing and enhancing learning outcomes. To realize this vision, this book seeks to identify educational realities, dismantle educational barriers using advanced technology, and speculate on future trajectories. Throughout this book, readers will delve into cutting-edge research on the assessment and enhancement of learning outcomes, explore the latest educational technologies for this purpose, and gain a more comprehensive understanding of future research directions. Let us collectively contribute to shaping the future of AI for education.

@book{2024-8_wei_enhancing, title = {Enhancing Educational Practices: Strategies for Assessing and Improving Learning Outcomes}, series = {Education in a Competitive and Globalizing World}, address = {New York, NY, USA}, publisher = {Nova Science Publishers}, editor = {Wei, Yuang and Qi, Changyong and Jiang, Yuan-Hao and Dai, Ling}, year = {2024}, isbn = {979-8-89530-030-5}, doi = {10.52305/RUIG5131}, }


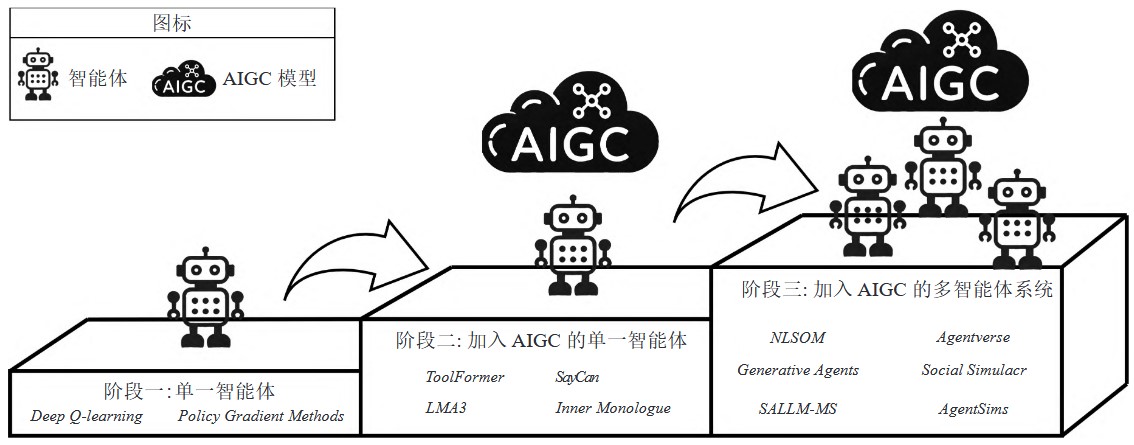

Multi-Agent Systems Supported by Large Language Models: Technical Pathways, Educational Applications, and Future Prospects

Chinese CSSCI

Journal Open Education Research, 2024

The rapid advancement of technology, particularly generative artificial intelligence (GenAI), is driving the transformation of education. In response to an increasingly complex and dynamic educational landscape, Multi-Agent Systems (MASs) have emerged as a promising way to address educational challenges owing to their collaborative, distributed, and adaptive capabilities. This study begins by analyzing the core principles and evolutionary trajectory of MASs, focusing on their early applications in education. It explores the evolution of these systems from three critical perspectives: domain, structure, and application scenarios. On the technical front, the study examines how GenAI enhances MASs by developing an "eye-brain-hand" capability framework using Large Language Models (LLMs). Additionally, it introduces a dual-cycle framework to boost the intelligence of these systems. Regarding applications, the study provides an in-depth analysis of the diverse roles of MASs in education, including an encyclopedia-type Agent that facilitates knowledge management, an intelligent learning companion that fosters collaboration, a teaching assistant Agent that aids in learning planning, and a specialized teacher Agent that supports subject-specific instruction. The study also highlights the potential of MASs for various educational contexts and cross-cultural environments. However, implementing MASs in education faces several challenges, such as ensuring system stability and security, mitigating potential negative impacts, and integrating the strengths of traditional educational methods. To address these issues, the study proposes a range of strategies, including integrating educational elements, aligning with digital infrastructure, transforming educational paradigms, and strengthening security, ethics, and privacy safeguards. These measures aim to reshape the educational ecosystem empowered by MASs and ensure its sustainable development. The study offers meaningful insights into intelligent educational technologies and contributes to the digitalization and high-quality development of education.

@article{2024-1_wu_multi-agent, title = {Multi-Agent Systems Supported by Large Language Models: Technical Pathways, Educational Applications, and Future Prospects}, volume = {30}, doi = {10.13966/j.cnki.kfjyyj.2024.05.007}, language = {zh-CN}, number = {5}, journal = {Open Education Research}, author = {Wu, Yonghe and Jiang, Yuan-Hao and Chen, Yuanyuan and Zhang, Wenxuan}, year = {2024}, pages = {63--75}, }


Enhancing Explainability of Knowledge Learning Paths: Causal Knowledge Networks

Conference Joint Proceedings of the Human-Centric eXplainable AI in Education and the Leveraging Large Language Models for Next Generation Educational Technologies Workshops (HEXED-L3MNGET 2024) co-located with 17th International Conference on Educational Data Mining (EDM 2024), 2024

A reliable knowledge structure is a prerequisite for building effective adaptive learning systems and intelligent tutoring systems. Pursuing an explainable and trustworthy knowledge structure, we propose a method for constructing causal knowledge networks. This approach leverages Bayesian networks as a foundation and incorporates causal relationship analysis to derive a causal network. Additionally, we introduce a dependable knowledge-learning path recommendation technique built upon this framework, improving teaching and learning quality while maintaining transparency in the decision-making process.

@inproceedings{2024-4_wei_enhancing, title = {Enhancing Explainability of Knowledge Learning Paths: Causal Knowledge Networks}, booktitle = {Joint Proceedings of the Human-Centric eXplainable AI in Education and the Leveraging Large Language Models for Next Generation Educational Technologies Workshops (HEXED-L3MNGET 2024) co-located with 17th International Conference on Educational Data Mining (EDM 2024)}, address = {Atlanta, Georgia, USA}, publisher = {International Educational Data Mining Society}, author = {Wei, Yuang and Zhou, Yizhou and Jiang, Yuan-Hao and Jiang, Bo}, year = {2024}, volume = {3840}, issn = {1613-0073}, doi = {10.48550/arXiv.2406.17518}, pages = {9--17}, url = {https://ceur-ws.org/Vol-3840/HEXED24_paper2.pdf}, language = {en}, }


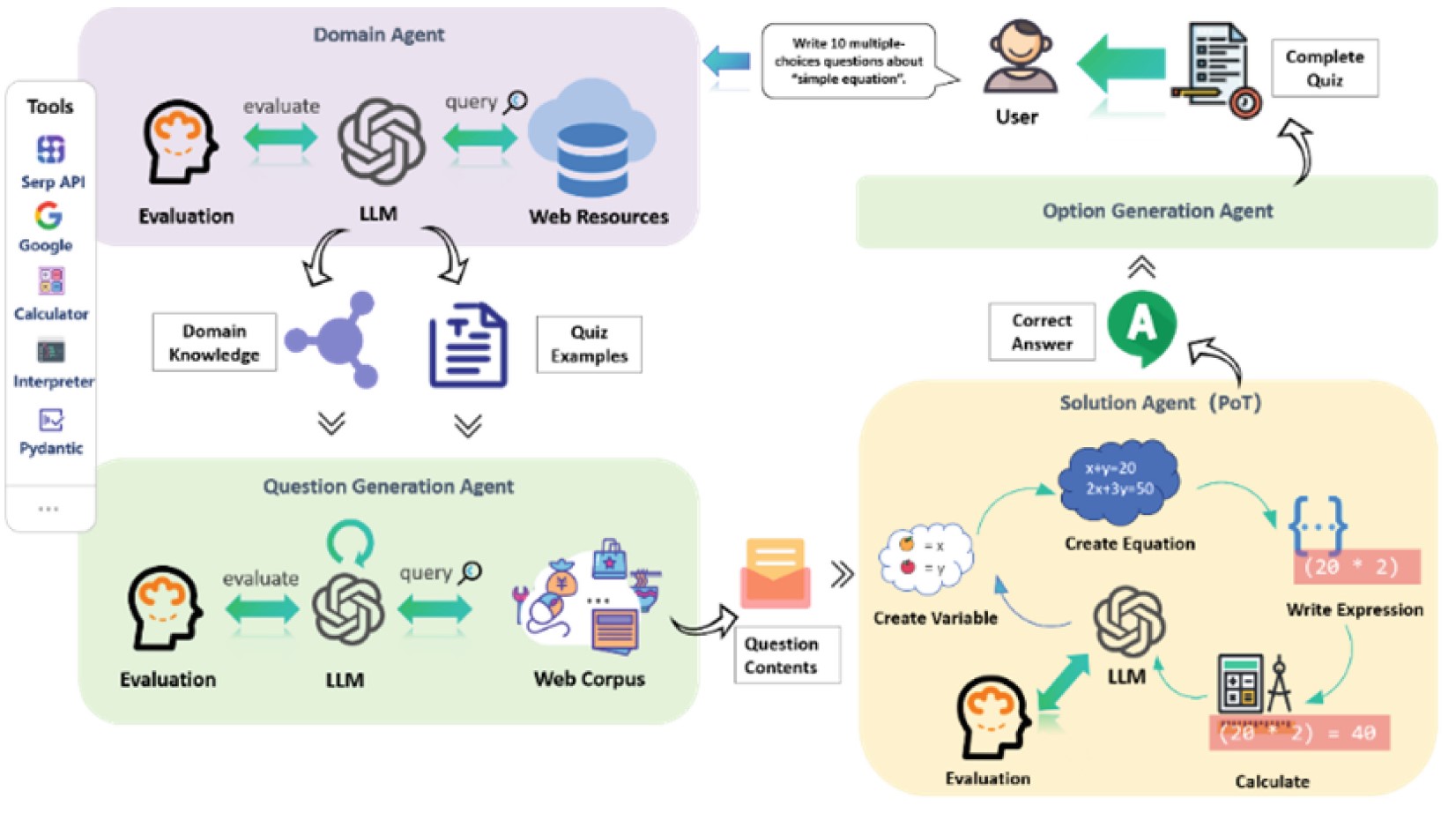

Generating Contextualized Mathematics Multiple-Choice Questions Utilizing Large Language Models

CAAI-A ECNU-Recommended Education Conferences

Conference Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky (AIED 2024), 2024

Applying mathematics to solve authentic problems plays an important role in mathematics education, yet generating high-quality multiple-choice questions with authentic contexts remains a great challenge. By combining multiple iterations of large language model dialogues with auxiliary external tools and the LangChain framework, this work presents a novel method for automatically generating contextualized multiple-choice mathematics questions. To assess the quality of the generated questions, 30 questions were randomly selected and 13 human experts were invited to rate them. The survey results indicate that the questions produced by the proposed method exhibit significantly higher quality than those generated directly by GPT-4 and are comparable to questions meticulously crafted by humans across multiple dimensions. The code is available on the project homepage: https://github.com/youzizzz1028/MCQ-generation-Chain.

@inproceedings{2024-2_li_generating, title = {Generating Contextualized Mathematics Multiple-Choice Questions Utilizing Large Language Models}, booktitle = {Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky (AIED 2024)}, address = {Cham}, publisher = {Springer Nature Switzerland}, author = {Li, Ruijia and Wang, Yiting and Zheng, Chanjin and Jiang, Yuan-Hao and Jiang, Bo}, year = {2024}, isbn = {978-3-031-64315-6}, doi = {10.1007/978-3-031-64315-6_48}, pages = {494--501}, language = {en}, }


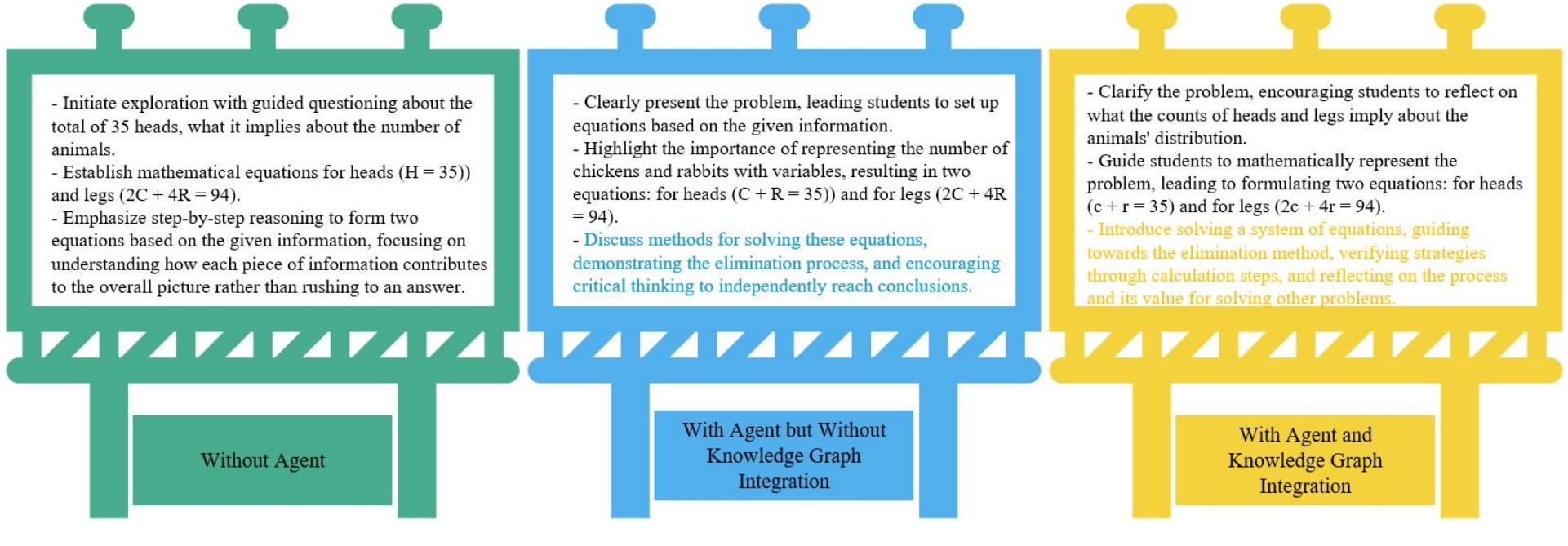

IntelliChain: An Integrated Framework for Enhanced Socratic Method Dialogue with LLMs and Knowledge Graphs


Conference Proceedings of the 28th Global Chinese Conference on Computers in Education (GCCCE 2024), 2024

With the continuous advancement of educational technology, the demand for Large Language Models (LLMs) as intelligent educational agents in providing personalized learning experiences is rapidly increasing. This study aims to explore how to optimize the design and collaboration of a multi-agent system tailored for Socratic teaching through the integration of LLMs and knowledge graphs in a chain-of-thought dialogue approach, thereby enhancing the accuracy and reliability of educational applications. By incorporating knowledge graphs, this research has bolstered the capability of LLMs to handle specific educational content, ensuring the accuracy and relevance of the information provided. Concurrently, we have focused on developing an effective multi-agent collaboration mechanism to facilitate efficient information exchange and chain dialogues among intelligent agents, significantly improving the quality of educational interaction and learning outcomes. In empirical research within the domain of mathematics education, this framework has demonstrated notable advantages in enhancing the accuracy and credibility of educational interactions. This study not only showcases the potential application of LLMs and knowledge graphs in mathematics teaching but also provides valuable insights and methodologies for the development of future AI-driven educational solutions.

@inproceedings{2024-6_qi_intellichain, title = {IntelliChain: An Integrated Framework for Enhanced Socratic Method Dialogue with LLMs and Knowledge Graphs}, booktitle = {Conference Proceedings of the 28th Global Chinese Conference on Computers in Education (GCCCE 2024)}, address = {Chongqing, China}, publisher = {Global Chinese Conference on Computers in Education}, author = {Qi, Changyong and Jia, Linzhao and Wei, Yuang and Jiang, Yuan-Hao and Gu, Xiaoqing}, year = {2024}, isbn = {978-626-97478-5-6}, doi = {10.48550/arXiv.2502.00010}, pages = {116--121}, }


AI Agent for Education: von Neumann Multi-Agent System Framework

Conference Conference Proceedings of the 28th Global Chinese Conference on Computers in Education (GCCCE 2024), 2024

The development of large language models has ushered in new paradigms for education. This paper centers on multi-Agent systems in education and proposes the von Neumann multi-Agent system framework. It breaks down each AI Agent into four modules (control unit, logic unit, storage unit, and input-output devices) and defines four types of operations: task deconstruction, self-reflection, memory processing, and tool invocation. Furthermore, it introduces related technologies associated with these four types of operations, such as Chain of Thought, Reason+Act, and Multi-Agent Debate. The paper also discusses the ability enhancement cycle of a multi-Agent system for education, including an outer cycle for human learners that promotes knowledge construction and an inner cycle for LLM-based Agents that enhances swarm intelligence. Through collaboration and reflection, the multi-Agent system can better facilitate human learners' learning and enhance its own teaching abilities in the process.

@inproceedings{jiang_ai_2024, title = {AI Agent for Education: von Neumann Multi-Agent System Framework}, booktitle = {Conference Proceedings of the 28th Global Chinese Conference on Computers in Education (GCCCE 2024)}, address = {Chongqing, China}, publisher = {Global Chinese Conference on Computers in Education}, author = {Jiang, Yuan-Hao and Li, Ruijia and Zhou, Yizhou and Qi, Changyong and Hu, Hanglei and Wei, Yuang and Jiang, Bo and Wu, Yonghe}, year = {2024}, isbn = {978-626-97478-5-6}, doi = {10.48550/arXiv.2501.00083}, pages = {77--84}, language = {en-US}, }


2023

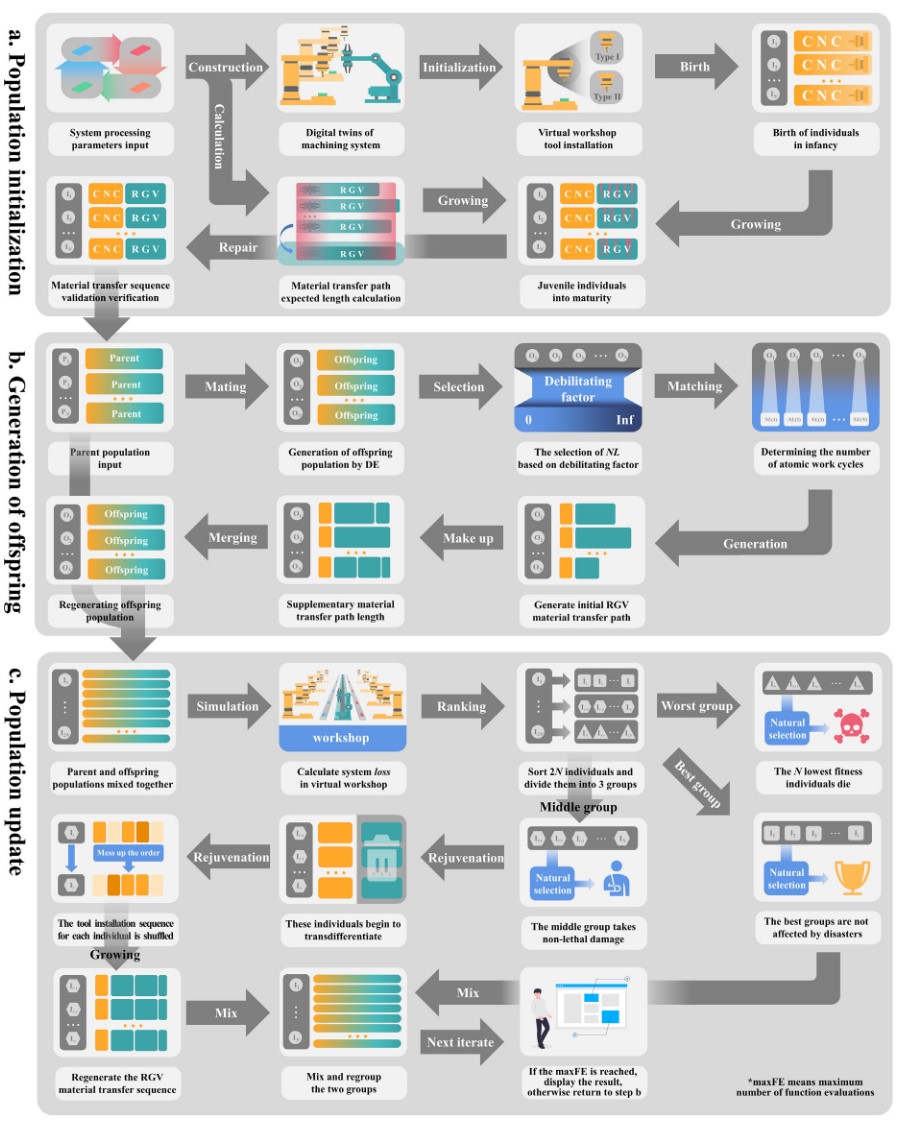

A Control System of Rail-Guided Vehicle Assisted by Transdifferentiation Strategy of Lower Organisms

TOP SCI Q1 IF = 7.5 CCF-C EI-Indexed Journal

Journal Engineering Applications of Artificial Intelligence, 2023

A rail-guided vehicle is a logistics management device widely used in place of manual labor to perform various material handling operations. In processing scenarios, the material transfer path of a rail-guided vehicle is typically very high-dimensional, which makes its optimization very difficult. To achieve high-efficiency processing, the transdifferentiation behavior of lower organisms was introduced into evolutionary computation, and a large-scale differential evolution algorithm based on a transdifferentiation strategy was proposed. This strategy allows some individuals with poor fitness to lose part of their information, return to a juvenile stage, and then reach maturity again to be selected for the next iteration, which helps maintain population diversity. Simulation results show that the proposed algorithm not only achieves an average output rate 25.68% higher than the comparison algorithms on the test cases but also maintains an excellent and stable performance distribution over the extended problem space, indicating that its superiority is not affected by the processing parameters. This research is expected to provide technical guidance for processing key components in the shipbuilding and aviation manufacturing industries.

@article{2023-1_jiang_control, title = {A Control System of Rail-Guided Vehicle Assisted by Transdifferentiation Strategy of Lower Organisms}, volume = {123}, issn = {0952-1976}, doi = {10.1016/j.engappai.2023.106353}, language = {en-US}, journal = {Engineering Applications of Artificial Intelligence}, author = {Jiang, Yuan-Hao and Gao, Shang and Yin, Yu-Hang and Xu, Zi-Fan and Wang, Shao-Yong}, month = may, year = {2023}, pages = {106353}, }


2022

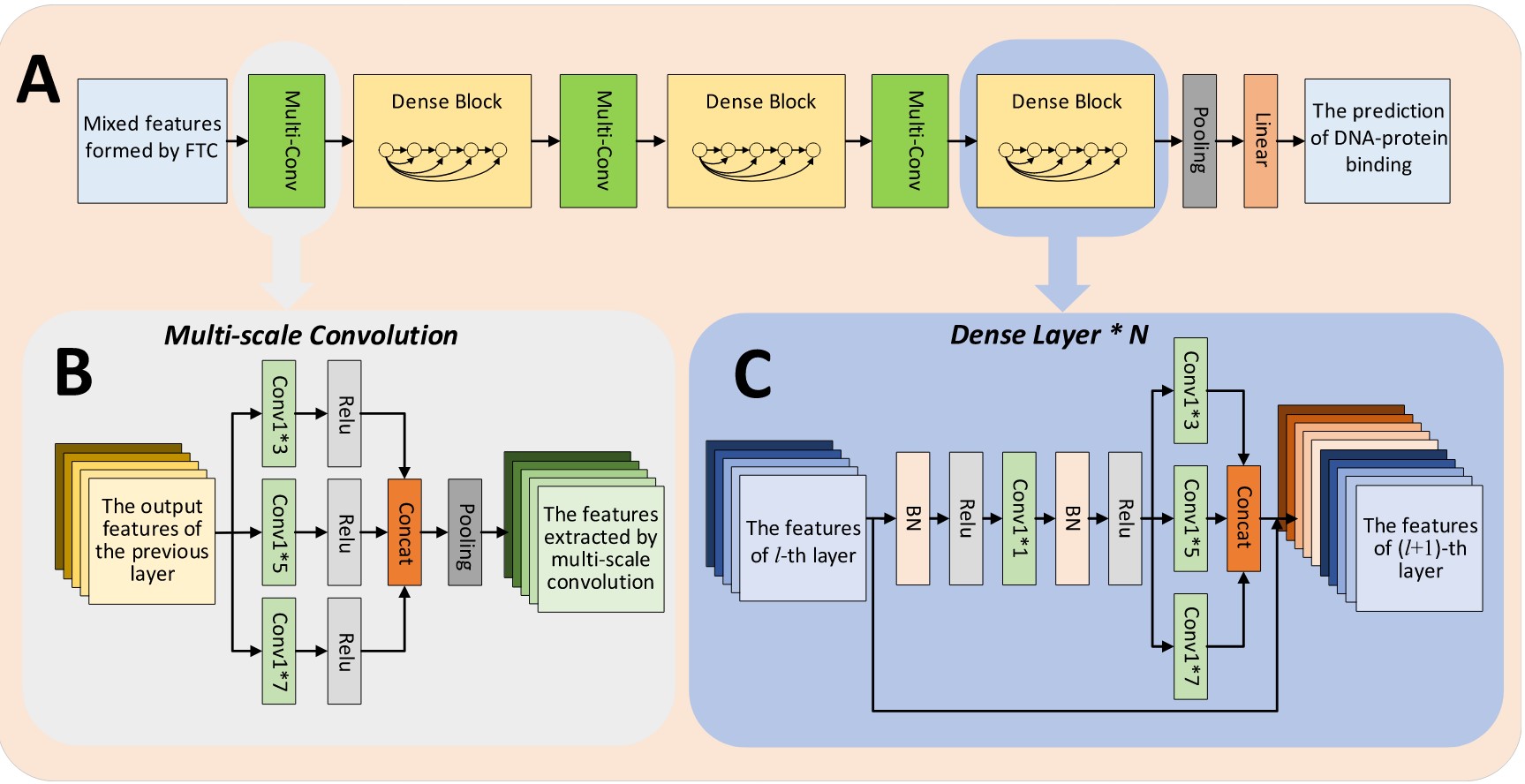

Improving the prediction of DNA-protein binding by integrating multi-scale dense convolutional network with fault-tolerant coding

SCI Q2 EI-Indexed Journal

Journal Analytical Biochemistry, 2022

Accurate prediction of DNA-protein binding (DPB) is of great biological significance for studying the regulatory mechanisms of gene expression. In recent years, with the rapid development of deep learning techniques, advanced deep neural networks have been introduced into the field and shown to significantly improve DPB prediction performance. However, these methods are primarily based on DNA sequences measured by ChIP-seq technology and fail to consider possible partial variations in motif sequences as well as errors of the sequencing technology itself. To address this, we propose a novel computational method, termed MSDenseNet, which combines a new fault-tolerant coding (FTC) scheme with densely connected deep neural networks. Three important factors contribute to the success of MSDenseNet. First, MSDenseNet uses a powerful feature representation approach, which transforms the raw DNA sequence into a fusion coding using the fault-tolerant feature sequence. Second, in terms of network structure, MSDenseNet uses multi-scale convolution both within the dense layer and preceding the dense block, which is shown to significantly improve network performance and accelerate convergence. Third, building on the advanced deep neural network, MSDenseNet can effectively mine the hidden complex relationships among the internal attributes of fusion sequence features to enhance DPB prediction. Benchmarking experiments on 690 ChIP-seq datasets show that MSDenseNet achieves an average AUC of 0.933 and outperforms the state-of-the-art method. The source code of MSDenseNet is available at https://github.com/csbio-njust-edu/msdensenet. The results show that MSDenseNet can effectively predict DPB. We anticipate that MSDenseNet will be exploited as a powerful tool to facilitate a more exhaustive understanding of DNA-binding proteins and aid in their functional characterization.

@article{2022-1_yin_improving, title = {Improving the prediction of {DNA}-protein binding by integrating multi-scale dense convolutional network with fault-tolerant coding}, volume = {656}, issn = {0003-2697}, doi = {10.1016/j.ab.2022.114878}, journal = {Analytical Biochemistry}, author = {Yin, Yu-Hang and Shen, Long-Chen and Jiang, Yuan-Hao and Gao, Shang and Song, Jiangning and Yu, Dong-Jun}, year = {2022}, pages = {114878}, }
